Google's standard for accessing dynamic content (for history)

In 2009, Google proposed a standard to allow search engines to access the content of Web pages generated dynamically with Ajax, because Web applications were expected to grow exponentially in the near future.

In 2015, the search engine changed its position and explained why in an article entitled "Deprecating our AJAX crawling scheme". (The actual name is Ajax and not AJAX, but there are preconceptions that we cannot eradicate.)

Since 2015, according to the article, it is sufficient for the robot to be able to access the JavaScript and CSS files in order to index dynamic content (although this depends heavily on the script). If the site follows the principle of progressive enhancement, where content is gradually added by JavaScript, the robot can access this data and take it into account for indexing.

The 2009 method is no longer relevant, except to know what it was. Let's use pushState, which is the new recommended method.

Using pushState

If your audience uses a recent browser, which excludes IE 9, you can dispense with the 2009 method entirely and simply use the pushState method of the history object. Its role is to artificially create a URL for a new state of the page.

The parameters are: an object that stores data about the state of the page, a temporary title, and a temporary URL. Example of use:

var state1 = { ref: "info" };

history.pushState(state1, "New Title", "new-page.html");

When the user triggers the action that builds the new state of the page, the URL changes and it can be indexed separately. It remains to write the code that makes the page work when this URL is accessed directly!
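The direct-access step can be sketched as follows. This is only a minimal illustration under assumptions: the PAGE_STATES table, the paths, and the stateForPath, goTo and render names are hypothetical and do not come from the article. The idea is that the same lookup serves three cases: a user action, the back and forward buttons (the popstate event), and a page loaded directly at the new URL.

```javascript
// Hypothetical table of page states, keyed by URL path (illustrative names only).
const PAGE_STATES = {
  "/index.html": { ref: "home", title: "Home" },
  "/new-page.html": { ref: "info", title: "New Title" },
};

// Return the state that belongs to a path, falling back to the home state.
function stateForPath(path) {
  return PAGE_STATES[path] || PAGE_STATES["/index.html"];
}

// Move to a new state: record it in the history, then draw it.
// `hist` is the browser's history object, or any object with a pushState method.
function goTo(path, hist, render) {
  const state = stateForPath(path);
  hist.pushState(state, state.title, path);
  render(state);
}

// Browser-only wiring; skipped when no window object exists.
if (typeof window !== "undefined") {
  const render = (state) => {
    document.title = state.title;
    // ...update the DOM for state.ref here...
  };
  // Back and forward buttons: redraw the state stored with the history entry.
  window.addEventListener("popstate", (event) => {
    render(event.state || stateForPath(window.location.pathname));
  });
  // Direct access: draw the state that matches the URL the page was loaded with.
  render(stateForPath(window.location.pathname));
}
```

In a browser, a click handler would call something like goTo("/new-page.html", history, render). The server must also answer a request for /new-page.html, otherwise reloading the page or following a search result leads to an error.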

For the record, the 2009 segment method

When JavaScript code changes the content of an Ajax page, it creates a new state. Google planned to index all possible states of a dynamic page, through links in its index that refer to the corresponding states of the page.

To make this possible it requires each state to match a fragment of the form:

https://example.com/mypage.html#fragment

This is something that is generated automatically by Ajax frameworks or HTML 5.

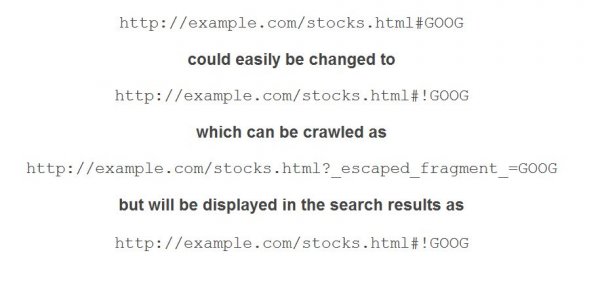

However, a fragment is never sent to the server, so it does not return an HTTP status code. To overcome this, Google must artificially convert it into a request, since requests do return a code.

https://example.com/mypage.html?_escaped_fragment_=fragment

The text of the fragment is now the value assigned to a generic parameter. This is invisible to the user.

Understanding the system

- The user creates a static page. It may contain dynamic links and anchors.

https://example.com/index.php?query#anchor

- We know that Google can now reference internal links, see the evolution of Google algorithm (September 25, 2009). This holds provided that there is a link in the page, which is not the case with dynamic content.

- But dynamic content is also available to the user in this format.

https://example.com/index.php?query#currentstate

- In addition, JavaScript can change the page content either initially or at the request of the user.

- But crawlers can only see this code:

<script src='showcase.js'></script>

- If a search engine tries to interpret the JavaScript code, the result will be unstable.

- Google initially proposed that the server interpret the JavaScript code and create a URL for the page state changed by JavaScript.

But it seems this is actually performed by the crawler itself.

- A URL is generated for the corresponding state of the page in the form:

https://example.com/index.php?_escaped_fragment_=currentstate

- But it would be displayed in this more readable form:

https://example.com/index.php#!currentstate

- The URL that the crawler generates is difficult to read. Robots and browsers convert the readable URL into this ugly URL.

- Only the pretty URL is accessible to the user and is included in Google's index.

This standard conversion format is now recognized by all actors on the Web.
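The conversion between the pretty URL and the ugly URL described above can be sketched as two small functions. This is an illustration, not the crawler's actual code: the function names are hypothetical, and encodeURIComponent is used as a simple stand-in for the escaping rules of the scheme, which are not reproduced here.

```javascript
// Convert a pretty "#!" URL into the "_escaped_fragment_" form requested by the crawler.
function toEscapedFragmentUrl(prettyUrl) {
  const i = prettyUrl.indexOf("#!");
  if (i === -1) return prettyUrl; // no "#!" state in this URL, nothing to convert
  const base = prettyUrl.slice(0, i);
  const state = prettyUrl.slice(i + 2);
  // Append to an existing query string if there is one.
  const separator = base.includes("?") ? "&" : "?";
  // Assumption: encodeURIComponent stands in for the scheme's own escaping.
  return base + separator + "_escaped_fragment_=" + encodeURIComponent(state);
}

// Convert the "_escaped_fragment_" form back into the pretty "#!" URL.
function toPrettyUrl(uglyUrl) {
  const match = uglyUrl.match(/^(.*?)[?&]_escaped_fragment_=(.*)$/);
  if (!match) return uglyUrl; // not an escaped-fragment URL
  return match[1] + "#!" + decodeURIComponent(match[2]);
}

console.log(toEscapedFragmentUrl("https://example.com/index.php#!currentstate"));
console.log(toPrettyUrl("https://example.com/index.php?_escaped_fragment_=currentstate"));
```

The first call turns the readable URL into the request form, and the second call restores the readable form that appears in the index.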

Summary from the slide

How to make an Ajax page crawlable?

The principle is established. Now, what should the webmaster do so that an Ajax page enters the system and is recognized by Google as crawlable?

The page itself should have this Meta:

<meta name="fragment" content="!">

When the Ajax script creates a new state, that is, when it changes the content of the page, it must create a fragment and add it to the URL of the page (after the #), replacing any previous fragment. This helps to retain the use of the back button.

The way to make this fragment depends on your Ajax framework.
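Without a framework, creating the fragment could look like the sketch below. The function names and the state name format are assumptions made for illustration. Assigning a new value to location.hash adds an entry to the browser history, which is what keeps the back button working, and the hashchange event lets the script redraw the matching state.

```javascript
// Build the "#!" fragment for a state name (the name format is an assumption).
function hashForState(stateName) {
  return "#!" + encodeURIComponent(stateName);
}

// Recover the state name from a fragment, or null if it is not a "#!" fragment.
function stateFromHash(hash) {
  return hash.startsWith("#!") ? decodeURIComponent(hash.slice(2)) : null;
}

// Record a new state in the URL; `loc` is window.location or a stand-in object.
function enterState(stateName, loc) {
  loc.hash = hashForState(stateName);
}

// Browser-only wiring; skipped when no window object exists.
if (typeof window !== "undefined") {
  const showState = (name) => {
    // ...update the page content for the state called `name` here...
  };
  // Fires after enterState() and when the user presses back or forward.
  window.addEventListener("hashchange", () => {
    const name = stateFromHash(window.location.hash);
    if (name !== null) showState(name);
  });
}
```

In a browser, the Ajax code would call something like enterState("checkbox", window.location) each time it changes the content of the page.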

If the page contains links on page states, which are generated by the Ajax code, these links should contain a fragment and the exclamation point:

<a href="#https://example.com/showcase.html#!checkbox