Understanding Panda, Google's search method

Why the change in the algorithm impacted so many websites...

The change in Google's ranking, called Panda after the name of one of its engineers, affected 11.8% of US search queries, demoting websites whose content was poor, unoriginal, or not very useful.

"We want to encourage a healthy ecosystem..." Google said.

The criticism the firm had been receiving (we remember the April 1 "AdSense Yacht" joke by the CEO of Demand Media) was hurting the search engine, and it had to react.

Panda was a program that Google ran manually from time to time to assess the "quality" of sites; it was then integrated into the organic algorithm in January 2012.

It computes a modification factor for a site, which adjusts the ranking that the site's pages had already obtained from other criteria.

No other criterion of the algorithm alters this score.

How the Panda algorithm changes results

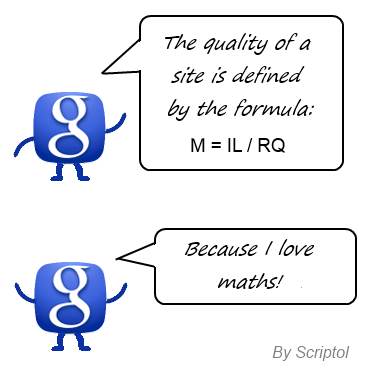

In theory, Panda distinguishes a quality site from a site of no interest as follows:

Google’s algorithm looks for authoritative sites, consistently offering new information and innovative statements contrary to the one churning out five hundred words about a topic with no special background on it.

Another more recent quote:

Demoting low-quality sites that did not provide useful original content or otherwise add much value.

This sentence speaks for itself: it states what a low-quality site is. But webmasters are looking for more precise criteria. Here is how Google assesses the "quality" of a site, based on the information given in patent 8,682,892:

- Panda counts the links to a site from sources that are independent of each other and of the site, then counts the queries from unique visitors (over a period or with no time limit, depending on the case) that lead to the pages of the site. The ratio between the two counts gives the following formula: M = IL / RQ.

This ratio then multiplies the initial score of each page before the results are ranked and displayed.

M = modification factor of the initial score,

IL (inbound links) is the number of independent backlinks,

RQ (resource queries) is the number of queries that bring the pages of the site into the results.

(Ref: USPTO 8,682,892).

- Panda penalizes websites whose traffic comes mostly from search engines and which have few backlinks.

- Unoriginal content, even a completely different article that repeats the same ideas (the same keywords, in practice), is penalized because it gets few links.

- Shallow or unhelpful content does not get backlinks.

- The same goes for poorly written content.

- The design of a site is not directly taken into account by the algorithm but it could influence linking.

- The fact that many users block a site in the search results has been treated as a negative signal by Panda since the second iteration, in April 2011. This is official (though Google tries to separate spam from genuine signals). This criterion was added to the original method described in the patent.

- Category pages, tag pages, and lists of pages: penalizing them is not new, but now the whole site is penalized.

- A global modification factor is assigned to the site. If a part of a site is affected by these criteria, the overall ranking of the site suffers: all of its pages are demoted.

- A group can include multiple sites of the same webmaster: the score is modified as a whole.

- Penalized pages are then visited less often by crawlers (according to Matt Cutts). You can therefore check the server logs to verify whether this method was applied.
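
The ratio described in the list above can be sketched in a few lines of Python. This is only a minimal sketch of this article's reading of the patent, not Google's implementation: the `SiteGroup` class, the function names, the sample figures, and the handling of a zero query count are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SiteGroup:
    """A group of resources: one site, or several sites of one webmaster."""
    independent_links: int  # IL: backlinks from independent sources
    resource_queries: int   # RQ: queries that bring the group's pages into results


def modification_factor(group: SiteGroup) -> float:
    """Return M = IL / RQ for the whole group."""
    if group.resource_queries == 0:
        # Assumption of this sketch: with no queries, scores are left unchanged.
        return 1.0
    return group.independent_links / group.resource_queries


def adjusted_scores(initial_scores: dict[str, float], group: SiteGroup) -> dict[str, float]:
    """Multiply every page's initial score by the same group-wide factor."""
    m = modification_factor(group)
    return {url: score * m for url, score in initial_scores.items()}


# Hypothetical figures, for illustration only.
site = SiteGroup(independent_links=500, resource_queries=1000)
pages = {"/good-article": 10.0, "/thin-tag-page": 2.0}

print(modification_factor(site))     # 0.5
print(adjusted_scores(pages, site))  # {'/good-article': 5.0, '/thin-tag-page': 1.0}
```

Because the factor is computed for the group and not for each page, the good article loses the same proportion of its score as the thin page, which is the site-wide effect described above.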

Panda was designed as a separate program because it requires vast resources to partition the Web into groups of resources and to compare them.

With Panda, Google wanted to radically change the role of the search engine: it no longer wants its results to contribute to the promotion and success of a site. A site must now obtain its audience solely from the links it receives, and if it gets some success, the engine may then put it on top, depending on other ranking factors.

The talk about quality was misleading: it is just a matter of popularity, as popular sites always get lots of links whatever they publish, which is often information taken from other sites. Reading the patent also shows that Google pays little attention to originality: fully copied content can rank better than the original if it gets more independent links.
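
The point about copies can be illustrated with a small worked example. It is only a sketch under this article's reading of the patent (an initial score multiplied by M = IL / RQ); the `panda_score` helper, the site names, and every figure are invented for the illustration.

```python
def panda_score(initial_score: float, independent_links: int, resource_queries: int) -> float:
    """Initial score multiplied by M = IL / RQ (the article's reading of the patent)."""
    return initial_score * independent_links / resource_queries


# Hypothetical figures: the same text earns the same initial score on both sites,
# but the popular site that copied it has far more independent links.
candidates = {
    "small-original.example": panda_score(8.0, independent_links=50, resource_queries=1000),
    "popular-copy.example": panda_score(8.0, independent_links=400, resource_queries=1000),
}

# Rank the sites by adjusted score, best first.
ranking = sorted(candidates, key=candidates.get, reverse=True)
print(ranking)  # the copy comes first
```

Nothing in the calculation looks at who published first, so under these assumptions the copy outranks the original purely on link counts.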

What to do when hit by the Panda update?

From Google:

"Low-quality content on some parts of a website can impact the whole site’s rankings, and thus removing low quality pages, merging or improving the content of individual shallow pages into more useful pages, or moving low quality pages to a different domain could eventually help the rankings of your higher-quality content."

But all experts agree that it is not possible to cancel the penalty without changing the content of existing pages and adding new content.

Merging two pages with banal content produces a bigger page with banal content; it will not solve anything.

The webmaster's effort must be focused on getting independent backlinks.

- In theory, a site maximizes the Panda formula if it has many backlinks and no content. A service could match this pattern. But Panda is only a factor that amplifies an initial score, which itself depends on the content.

- Removing all pages that do not have backlinks is certainly an effective way to improve the site's ratio and thus recover. Another way is to take them out of the index with a noindex meta tag.

- For pages whose value to the user the engine cannot understand, you may enrich the content. But do not do so if they have a lot of backlinks: changing the content could trigger other penalties (unless it brings them new backlinks).

- To get genuine backlinks, make sure your content offers something useful and unique (search for similar content to check). Always ask what your page offers that others do not.

- Personalize the content. Use your own words. And, speaking to bloggers: remember your school essays. The teacher did not ask you to copy the subject or someone else's answer, but to give your own ideas. Use different viewpoints to appear authoritative and unbiased.

- Take care of the user experience: give visitors an incentive to see more pages or to return to the site.

- For pages that have no chance of getting backlinks or of doing well in the SERPs, make them dynamic and therefore invisible to the engines, while still answering visitors' questions. This is what we do here with the dictionary (button on the top right), through the use of Ajax.

- Watch the exit rate in Analytics or another statistics tool. Pages that have a high exit rate penalize the site. They could be deleted or made dynamic if they have no backlinks.

- Again, do not modify pages with a lot of backlinks.
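
The triage suggested by the last items of this list can be sketched as follows. This is a hedged illustration, not a rule from Google: the `PageStats` record, the two thresholds, and the action labels are assumptions, and the exit rate is taken here as exits divided by pageviews, with figures you would export yourself from your statistics tool.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    pageviews: int  # times the page was viewed
    exits: int      # times the page was the last one of a visit
    backlinks: int  # independent backlinks pointing at the page


def exit_rate(page: PageStats) -> float:
    """Exits divided by pageviews; 0.0 for a page that was never viewed."""
    return page.exits / page.pageviews if page.pageviews else 0.0


def triage(page: PageStats, max_exit_rate: float = 0.8, many_backlinks: int = 10) -> str:
    """Suggest an action for a page; both thresholds are arbitrary assumptions."""
    if page.backlinks >= many_backlinks:
        return "leave unchanged"         # do not modify pages with a lot of backlinks
    if page.backlinks == 0 and exit_rate(page) > max_exit_rate:
        return "delete or make dynamic"  # high exit rate and no backlinks
    return "review and enrich"


# Hypothetical pages, for illustration only.
for page in [
    PageStats("/glossary/term", pageviews=100, exits=90, backlinks=0),
    PageStats("/popular-guide", pageviews=100, exits=90, backlinks=25),
    PageStats("/short-note", pageviews=100, exits=20, backlinks=0),
]:
    print(page.url, "->", triage(page))
```

The backlink check comes first on purpose, so that a well-linked page is never flagged for deletion, whatever its exit rate.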

You should know that changing the existing content will not suffice to undo the effects of the penalty, because it will not attract new backlinks. It is above all new, unique content that will do so.

This will require a lot of work, but you can console yourself by thinking of the content farms, which have millions of pages to modify...

Conclusion

The most important fact, which makes the results incomprehensible to webmasters and which has been officially confirmed by Google, is that if a part of a site is penalized, the whole site is penalized. So pages of very good quality will rank lower in the SERPs than lower-quality pages from other sites!

This is all the more difficult to accept because, while Google has presented its process as a means of selecting quality pages, its main effect is to promote the biggest sites and increase their audience even further.

See also

- Panda patent made simple. Description of the method.

- Panda update, facts and myths. List of misconceptions, often widespread.

More information

- Finding more quality sites. This is the official view of Google for Panda.

- Interview with Google's staff. This interview gives a historical view of how Panda came about and the reasons behind it, without providing precise data on how the algorithm operates.