20 mistakes to avoid with search engines

The webmaster's goal must be a good match between the quality of the site and what search engines want to offer Internet users in response to their queries.

But through clumsiness, or a desire to promote the site artificially, a webmaster can make mistakes with the opposite effect: rather than appearing prominently in the results pages, the site is downgraded or removed from the index!

Here is a list of things that must be avoided on a website:

- Do not build a site with frames.

Using a frameset makes it easy to create a website in the "course" style, where you can navigate easily between chapters. But you get the same result with a template in which only the editorial content changes, or by spreading the menu across pages with a script such as Site Update.

- Do not build a site in Flash.

Flash can be used as an accessory to enliven the site, but it is poorly indexed by search engines and does not help them index the pages.

- Dynamic links.

Accessing content through JavaScript links loses the benefit of internal links and can prevent crawlers from indexing the pages unless a sitemap is added. Use such links only if you actually want to keep pages out of the index.

- Dynamic content.

The same is true of content that is added to pages by Ajax. This content is not seen by robots and is not indexed. Ajax should be restricted to data provided at the visitor's request.

- Duplicate content.

Making the same page accessible to robots in several copies, or letting them reach the same page through different URLs (which amounts to the same thing for crawlers), causes the page to be removed from the index. If a page is copied onto a different site, the engines may ignore the more recent copy or the one that has fewer backlinks.
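One way to spot pages reachable under several URLs is to reduce every URL to a single comparable form and group the ones that collide. The sketch below is only an illustration: the normalization rules (forcing https, dropping "www.", treating index.html as the directory, ignoring the parameters in `IGNORED_PARAMS`) are assumptions that must be adapted to the site, and all names are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change the page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize(url):
    """Reduce a URL to one comparable form (assumed rules, adjust per site)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path or "/"
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]
    # Drop ignored parameters and sort the rest so their order does not matter.
    query = urlencode(sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in IGNORED_PARAMS
    ))
    return urlunsplit(("https", host, path, query, ""))


def duplicate_groups(urls):
    """Return the groups of URLs that normalize to the same address."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: group for key, group in groups.items() if len(group) > 1}
```

Each group returned by `duplicate_groups` is a candidate for a single address, with the other variants redirected to it.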

If there are too many duplicate pages, the engine will "think" that you are trying to stuff its index and will penalize the site.

- Hidden text.

There are several ways to hide text from visitors while keeping it visible to robots, and they are all prohibited. Whether they use CSS rules that place the text outside the visible part of the page or a text color identical to the background color, these tricks are detected and the site is penalized.

Google has been granted a patent, No. 8392823, for a system for detecting hidden text and links.

- Different displays.

Even with a legitimate intent, such as a bilingual site that serves different pages depending on the browser's language, we must avoid presenting robots with content different from what visitors see.

- Meta refresh.

In the same spirit, deliberately using the meta refresh tag to show visitors content different from what the robots see is prohibited.

- Pages under construction.

Leaving pages under construction on a website where robots can reach them is bad practice.

- Broken links.
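Broken links can be found with a script that is run periodically. The sketch below is one possible shape, not a reference implementation: it collects the links of one page and reports those that answer with an error status or cannot be reached. The function names are hypothetical, and it assumes that servers answer HEAD requests, which is not always the case.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            # Skip in-page anchors and non-HTTP pseudo links.
            if href and not href.startswith(("#", "mailto:", "javascript:")):
                self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


def http_status(url, timeout=10):
    """Return the HTTP status code, or None if the server cannot be reached."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code
    except OSError:  # DNS failure, refused connection, timeout
        return None


def broken_links(html, base_url, status_of=http_status):
    """Return (link, status) pairs for links that fail or return 4xx/5xx."""
    broken = []
    for link in extract_links(html, base_url):
        status = status_of(link)
        if status is None or status >= 400:
            broken.append((link, status))
    return broken
```

The `status_of` parameter lets the check be tried without network access, for example by passing the `get` method of a dictionary that maps URLs to status codes.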

A significant number of broken links is the mark of an abandoned site. Engines take into account whether a site is regularly maintained (see Google's patent on page scoring). Run a script regularly to test for broken links, because the Web evolves and pages disappear or change address.

- Lack of titles.

The <title> tags are essential: engines use them in the results pages. The title must reflect the content of the article in an informative, non-promotional form.

The description tag is essential when the page contains little text and consists mostly of Flash content, images, or videos.
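Titles can be audited automatically. The following sketch assumes the pages are already available as a mapping from URL to HTML source (how they are fetched is left out) and reports pages with no usable title as well as titles shared by several pages. The names `page_title` and `audit_titles` are hypothetical.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Capture the text inside the first <title> element of a page."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self.in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def page_title(html):
    """Return the stripped title of a page, or an empty string if none."""
    parser = TitleParser()
    parser.feed(html)
    return (parser.title or "").strip()


def audit_titles(pages):
    """pages maps a URL to its HTML; returns (URLs without title, shared titles)."""
    missing, by_title = [], defaultdict(list)
    for url, html in pages.items():
        title = page_title(html)
        if title:
            # Compare case-insensitively so "Home" and "home" count as one title.
            by_title[title.lower()].append(url)
        else:
            missing.append(url)
    shared = {title: urls for title, urls in by_title.items() if len(urls) > 1}
    return missing, shared
```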

The keywords meta tag may be omitted because Google does not take it into account, as stated explicitly in the interview.

- Duplicated title.

Having the same title in the <title> tag on every page is very damaging. That alone is enough for pages not to be indexed, as you can see on webmaster forums. Repeating the same keywords across all titles should also be avoided.

- Doorway or satellite.

Creating pages specifically for engines, with chosen keywords and content of no interest, is what Google calls "doorway pages". Forget it. A satellite page is a page created on another site solely to provide links in quantity to a primary website. Forget it.

- Trading links or reciprocal links.

Google asks webmasters not to trade links (a "link scheme") in order to improve the PageRank of a site. Registering with directories and giving them a reciprocal link is not something to do. You cannot be penalized for links pointing to your site, even if the directory is penalized, but you will be for links pointing to a penalized site. Note that not all directories are penalized, only those whose content is not moderated.

- Redirecting the domain name.

Redirecting one domain name to another with a frame is a mistake. It must be a 301 redirect. See this page for how to do it.
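As an illustration of what a 301 redirect looks like at the HTTP level, here is a minimal sketch using Python's standard `http.server` module. It is not the method the article links to, and in practice the redirect is usually set up in the web server configuration. The target `NEW_ORIGIN` is a hypothetical domain, and the path is kept unchanged so that the site structure stays the same.

```python
from http.server import BaseHTTPRequestHandler

NEW_ORIGIN = "https://www.new-domain.example"  # hypothetical target domain


def redirect_location(path, new_origin=NEW_ORIGIN):
    """Map a requested path (query string included) to the new domain."""
    if not path.startswith("/"):
        path = "/" + path
    return new_origin.rstrip("/") + path


class PermanentRedirect(BaseHTTPRequestHandler):
    """Answer every GET or HEAD request with a 301 to the same path."""

    def do_GET(self):
        self.send_response(301)  # 301 = Moved Permanently
        self.send_header("Location", redirect_location(self.path))
        self.send_header("Content-Length", "0")
        self.end_headers()

    do_HEAD = do_GET
```

To try it locally, pass the handler to `http.server.HTTPServer(("", 8080), PermanentRedirect)` and call `serve_forever()` on the result.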

When you redirect the contents of one domain to another, you should keep the same site structure and, on Google's advice, the same presentation. It can be changed later.

- Variables in URL.

Having a variable in a URL in the form article.php?x=y does not prevent indexing; forum CMSs do it with no problem. But having several variables in the URL may prevent indexing. It is often said that a variable called id or ID prevents indexing because it is seen as a session variable.

- Over-optimization.

Most of the advice that we can give a webmaster to get content better indexed or ranked higher can be distorted and misused. That is where over-optimization comes in.

- Over-optimization occurs when the page is stuffed with keywords intended solely for the engine, without bringing anything new to the user. Engines are perfectly able to judge the originality and relevance of a page.

- We also over-optimize by using the same anchors in links on the site too often, or by putting the same keyword in the title, the URL, the subtitles, the alt attributes, etc.

- Creating links to your own site in directories without visitors or in forums, with unnatural anchors clearly intended for engines. In fact, the Penguin filter is aimed precisely at this.

- Hidden text meant to deceive engines is an old black hat technique that is considered part of over-optimization.

- H1 and H2 tags are needed to organize a text and make it more readable. They are taken into account by the algorithms. But if there are too many tags and not enough text, it is still over-optimization. In fact, blogs now tend to publish pages without subtitles for fear of penalties, which actually makes them unreadable.

- Similar pages with the same keywords, or text added only to place keywords. The algorithm can use a number of signals to judge the value of content.

This can be generalized to every aspect of the site: is it made for visitors or for search engines?

It is not always clear whether a site is over-optimized. When are there too many keywords or similar anchors? The best way to judge is to compare your pages with those of the reference sites in your field.

- Double redirect.

Placing a link on a page to another site that redirects back to the starting site (this can happen when sites are merged) inevitably leads to the deindexing of the page containing the link.

- Simple redirect.

Some quite legitimate actions may nonetheless cause a downgrade. Frequently moving pages on a site with 301 redirects is one example. This method is used by spammers, who redirect visitors from one page to another to capture its traffic. When you reorganize your site and use 301 redirects to different content, or even a different layout, you trigger a negative signal that causes a penalty.

- Blocking robots' access to CSS and JavaScript.

If a directive in robots.txt prohibits Google's crawlers from parsing CSS and JavaScript files, the site will be penalized. Several important sites experienced this: they lost two thirds of their visits, then regained their traffic once the blocking was removed. This penalty appeared in 2014.

Google wants to be able to view pages as the user does, because CSS or scripts can change the displayed content.
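Whether a robots.txt file blocks style sheets or scripts can be checked before publishing it. This sketch uses `urllib.robotparser` from the Python standard library; the robots.txt content and the asset URLs are made-up examples. Note that this parser does plain prefix matching and does not implement the wildcard extensions that Google's crawler understands, so it is only a first check.

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt, asset_urls, user_agent="Googlebot"):
    """Return the asset URLs that the given robots.txt forbids to the crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


# Made-up example: the /css/ directory is closed to all robots.
robots_txt = """\
User-agent: *
Disallow: /css/
Disallow: /private/
"""
assets = [
    "https://example.com/css/site.css",
    "https://example.com/js/app.js",
]
print(blocked_assets(robots_txt, assets))
# ['https://example.com/css/site.css']
```

An empty list means that, according to this parser, the crawler can reach every asset the page needs.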

A last mistake: forgetting to read the guidelines. Any webmaster who relies on Google's services to improve traffic to a website must read the webmaster guidelines.

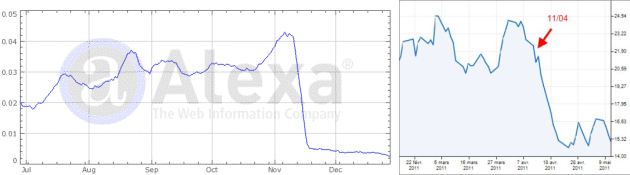

Traffic drop

Conclusion

Although the list seems long, applying the rules is not difficult. If you focus on the content, building a site within the rules is enough for it to get the place it deserves in the results, according to its content and its originality.